I’ve been experimenting with Blue Iris and CodeProject AI in my lab to see what kind of performance I would get from Intel’s integrated graphics (Intel HD Graphics 630) and an Nvidia GTX 1650 4GB. The test machine is an HP EliteDesk 800 G3 Small Form Factor (SFF) with an Intel i7-7700 and 32GB of RAM. Storage is a combination of NVMe, SSD, and Western Digital Purple drives in RAID 0.

From an “opinion-based perspective”, I was seeing really good performance with just the Intel 630 onboard GPU. Once I added the Nvidia card and moved three 4K-capable cameras to it, performance went from really good to great. Some of the 4K cameras view a large hay field. With the Intel onboard GPU, I could see the grass moving, but it was slightly delayed when I looked out of the window and watched Blue Iris at the same time. Once I put those cameras on the Nvidia GPU, there was almost no delay and I could see the individual blades of grass blowing in the wind. Opinions sometimes carry more “weight than the law”, so this is a winner in my book. Even with just the Intel GPU, it was doing really well.

There are quite a few threads on Reddit and the IPCamTalk forums about how to determine which GPU is being used. I was able to see the GPU in use per camera in the Blue Iris Status window (the |_ icon with an arrow pointing to the top right), under the HA (Hardware Acceleration) column.

HA: Hardware acceleration. When a value is shown, the camera is currently using hardware decoding (I=Intel, I+=Intel+VPP, N=Nvidia, DX=DirectX, I2=Intel Beta).

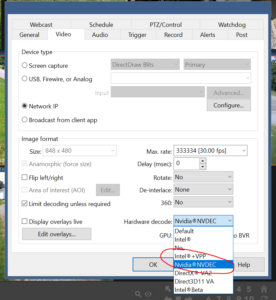

To choose which hardware acceleration a camera uses, I edited each of my 4K cameras and set it to the Nvidia GPU.

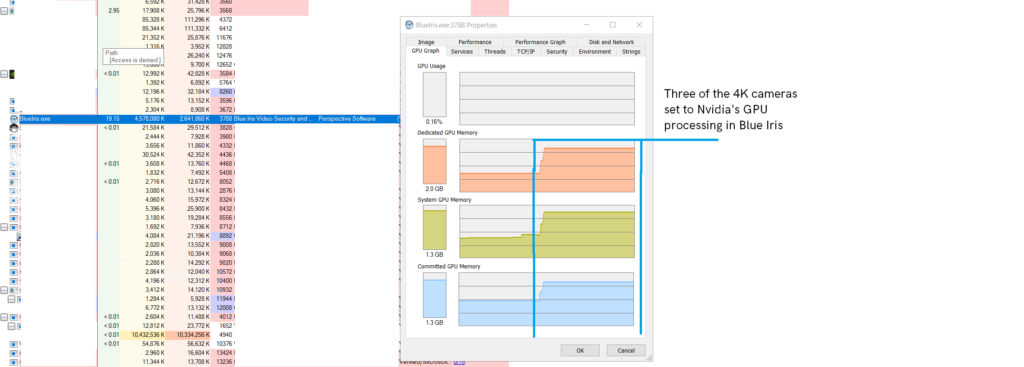

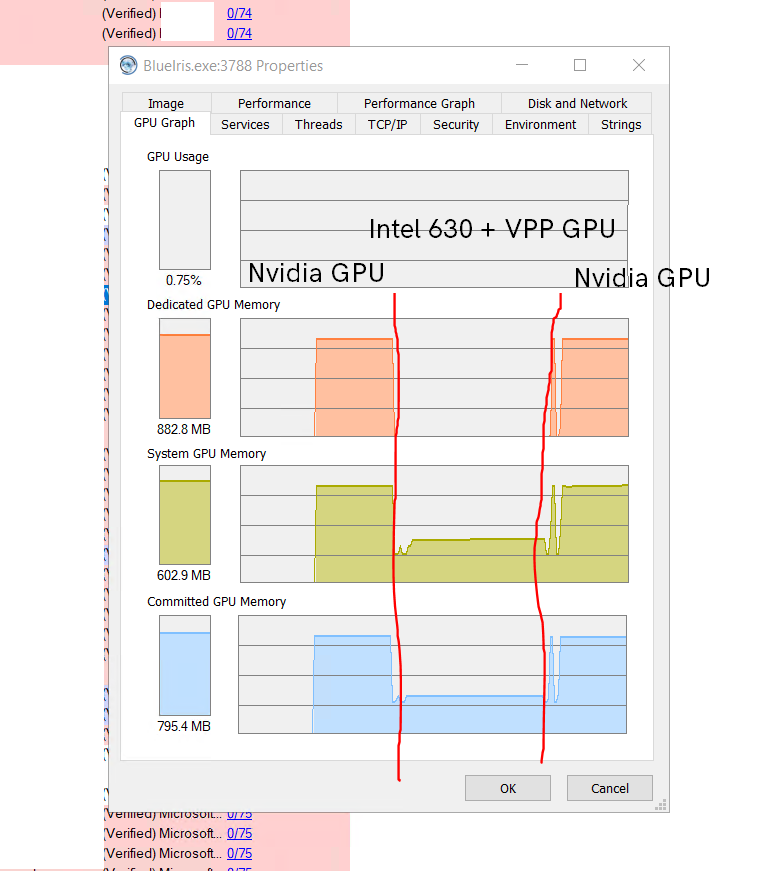

I switched between Intel+VPP and Nvidia, and this is what Process Explorer showed for GPU utilization.

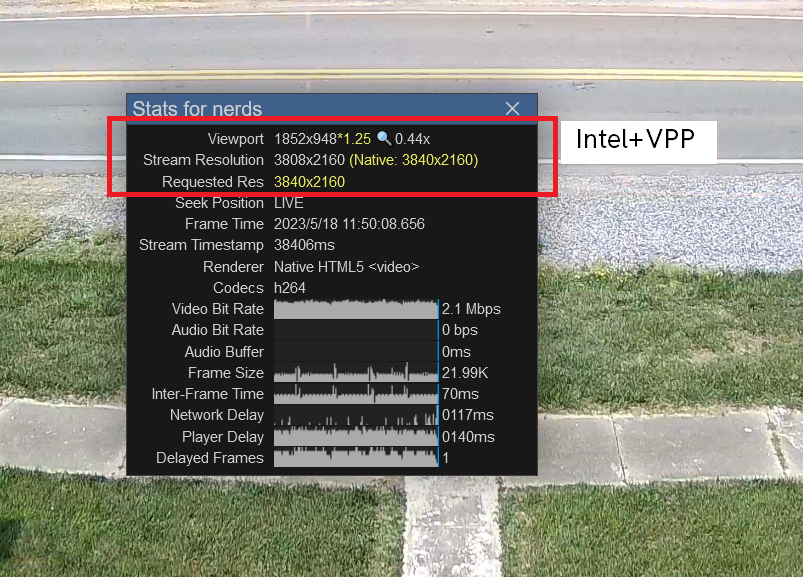

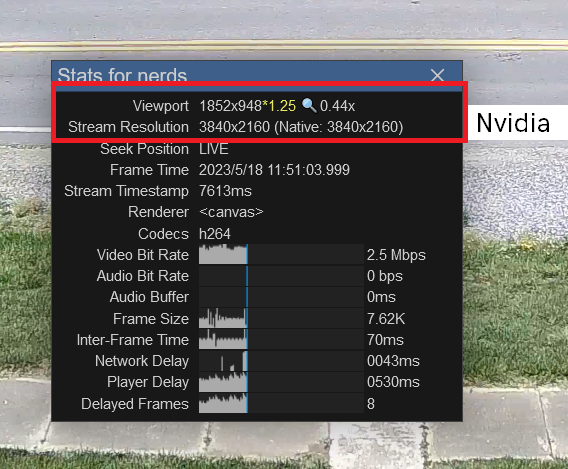
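For anyone who would rather script this check than watch Process Explorer, here is a minimal Python sketch that reads utilization and memory use for the Nvidia card from `nvidia-smi`. It assumes `nvidia-smi` is installed with the Nvidia driver and is on the PATH, and it only covers the Nvidia GPU, not the Intel one. The function names are my own and are not part of Blue Iris or any Nvidia library.

```python
import csv
import io
import subprocess

# Fields requested from nvidia-smi, in the order they are parsed below.
QUERY_FIELDS = ["name", "utilization.gpu", "memory.used", "memory.total"]


def parse_gpu_stats(csv_text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts.

    Assumes every field is reported as a number; a GPU that reports
    "[N/A]" for a field would raise ValueError here.
    """
    stats = []
    for row in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if not row:
            continue  # skip blank lines
        name, util, used, total = (field.strip() for field in row)
        stats.append({
            "name": name,
            "utilization_pct": int(util),
            "memory_used_mib": int(used),
            "memory_total_mib": int(total),
        })
    return stats


def query_gpu_stats():
    """Run nvidia-smi once and return one dict per Nvidia GPU."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_gpu_stats(result.stdout)
```

Calling `query_gpu_stats()` before and after moving a camera between Intel+VPP and Nvidia should give numbers comparable to the Nvidia graphs in the screenshots above.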

When I added a third camera, I watched the stats in real time. I noticed a difference between the requested resolution when using Intel+VPP and the actual resolution when using Nvidia.

I’m also running CodeProject AI in a Docker container on the EliteDesk 800 G3, and I noticed an improvement once the Nvidia GPU was added. I did have to set my Docker instance to use all GPUs, but that was easy enough. There were two big improvements with the Nvidia GPU and the Docker setup: 1) The modules in CodeProject AI stopped crashing. Before the Nvidia card, they kept crashing and restarting. 2) The AI processes much faster. Although I don’t have a baseline screenshot of CodeProject AI using Nvidia, I did notice it was using about 300-350 MB of GPU RAM before I started moving cameras to the Nvidia GPU, so the footprint is really small.
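As a rough illustration of the “use all GPUs” setting, here is a minimal Python sketch that builds a `docker run` command with Docker’s `--gpus all` flag. The image tag, container name, and port are assumptions for illustration, not necessarily what this setup uses, so check them against the CodeProject AI Docker documentation. GPU passthrough also assumes the Nvidia container runtime (or WSL2 GPU support on Windows) is already working.

```python
import shlex
import subprocess


def build_docker_run(
    image="codeproject/ai-server:cuda11_7",  # assumed image tag, verify first
    name="codeproject-ai",                   # hypothetical container name
    port=32168,                              # assumed CodeProject AI port
    gpus="all",                              # expose every GPU to the container
):
    """Return the docker run argument list for a GPU-enabled container."""
    return [
        "docker", "run", "-d",
        "--name", name,
        "--gpus", gpus,
        "-p", f"{port}:{port}",
        image,
    ]


def start_container(dry_run=True):
    """Return the command as a string; only run it when dry_run is False."""
    cmd = build_docker_run()
    if not dry_run:
        subprocess.run(cmd, check=True)
    return shlex.join(cmd)
```

`start_container()` only returns the command string by default, so it can be reviewed before anything is started. Pass `dry_run=False` to actually launch the container.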

As always, many thanks to my friend (and author!) Blaize for letting me bounce around ideas.